Introduction

An AI note-taking software comparison only helps if it reveals how tools behave under real conditions, not just which buttons they advertise. Over the past year, feature lists across AI note apps have converged: summaries, tags, search, voice input. Yet users report wildly different outcomes (missed context, noisy tags, unreliable recall) depending on the software they chose. This article compares AI note-taking software the way daily users experience it: how summaries age, how search behaves weeks later, how organization scales, and how much control you keep. If you’re choosing between tools, or wondering why your current one feels “off,” this comparison focuses on the differences that actually matter.

Why feature lists mislead in AI note-taking software comparisons

Feature lists answer what exists, not how it behaves. Two tools can both claim “AI summaries,” yet:

One preserves qualifiers and decisions.

Another compresses aggressively and drops nuance.

Likewise, “semantic search” may prioritize recency in one app and conceptual similarity in another. The label is the same; the experience is not.
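A toy ranking function makes the difference concrete. This is a minimal Python sketch, not any vendor’s actual algorithm: it assumes each note already has a similarity score for the query (in a real tool this would typically come from embeddings) and blends it with an exponential recency decay. The weights, the seven-day half-life, and the notes are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Note:
    title: str
    similarity: float  # hypothetical precomputed query similarity, 0..1
    age_days: float    # how long ago the note was written


def recency(age_days: float, half_life_days: float = 7.0) -> float:
    """Exponential decay: 1.0 for a brand-new note, 0.5 after one half-life."""
    return 0.5 ** (age_days / half_life_days)


def rank(notes: list[Note], recency_weight: float) -> list[str]:
    """Blend similarity and recency; the highest blended score ranks first."""
    def score(note: Note) -> float:
        return (1 - recency_weight) * note.similarity + recency_weight * recency(note.age_days)

    return [note.title for note in sorted(notes, key=score, reverse=True)]


notes = [
    Note("Q3 pricing decision", similarity=0.92, age_days=30),
    Note("Yesterday's standup", similarity=0.55, age_days=1),
    Note("Last week's pricing chat", similarity=0.70, age_days=6),
]

print(rank(notes, recency_weight=0.2))  # similarity-led: "Q3 pricing decision" first
print(rank(notes, recency_weight=0.7))  # recency-led: "Yesterday's standup" first
```

With a low recency weight, the month-old note that best matches the query ranks first; with a high one, yesterday’s loosely related standup wins. Same notes, same query, different “semantic search.”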

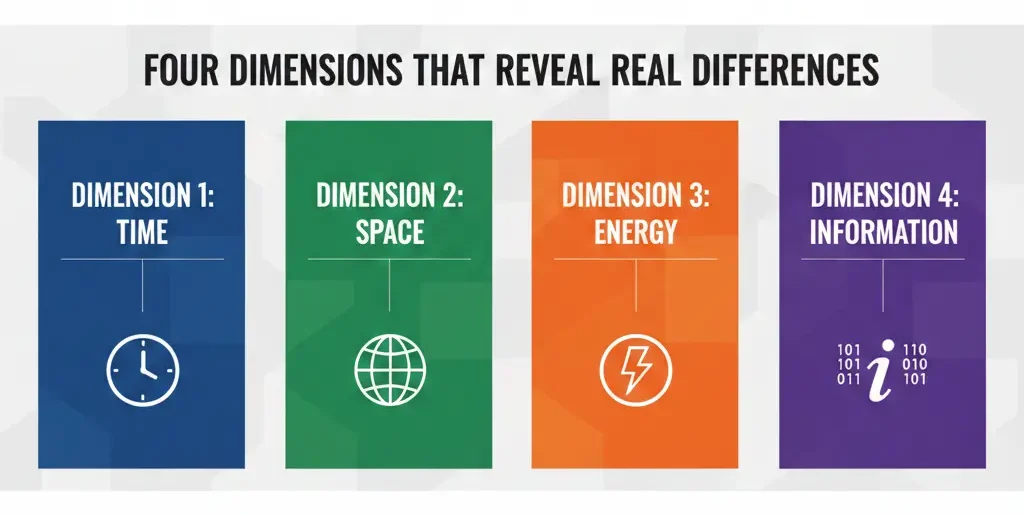

The four dimensions that reveal real differences

When comparing AI note-taking software, evaluate these dimensions first:

1) Summary behavior

Does the AI:

Preserve intent and decisions?

Over-summarize to bullet points?

Allow edits that persist?

2) Context handling

Can the tool:

Reference older notes accurately?

Link related ideas without duplication?

Avoid mixing similar topics?

3) Retrieval reliability

When you search weeks later:

Do you get the right note?

Are results explainable?

Can you pin authoritative notes?

4) User control

Are automation levels adjustable, or fixed?

Control beats cleverness in the long run.
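Retrieval reliability is the easiest of the four dimensions to turn into a number. A minimal Python sketch, assuming you write down a few test queries, the note each one should find, and the result titles the tool actually returned (copied by hand; no tool API is assumed): it reports the share of queries whose right note lands in the top k results. The queries, titles, and k=3 cutoff are illustrative.

```python
def hit_rate(expected: dict[str, str], results: dict[str, list[str]], k: int = 3) -> float:
    """Share of test queries whose expected note appears in the tool's top-k results.

    expected: query -> title of the note that should be found
    results:  query -> result titles in the order the tool returned them
    """
    hits = sum(
        1 for query, target in expected.items()
        if target in results.get(query, [])[:k]
    )
    return hits / len(expected)


expected = {
    "pricing decision": "Q3 pricing decision",
    "vendor contract terms": "Vendor call",
}
results = {
    "pricing decision": ["Yesterday's standup", "Q3 pricing decision", "Roadmap"],
    "vendor contract terms": ["Roadmap", "Hiring plan", "Budget", "Vendor call"],
}
print(hit_rate(expected, results))  # 0.5: the vendor note ranked fourth, outside the top 3
```

Rerunning the same queries every few weeks shows whether retrieval holds up as the note collection grows.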

Common comparison mistakes (and how to fix them)

Mistake 1: Comparing demos instead of workflows.

Fix: Import the same messy note set into each tool.

Mistake 2: Judging summaries immediately.

Fix: Revisit summaries after a week; check drift.

Mistake 3: Ignoring exit paths.

Fix: Test exporting notes early; both format and completeness matter.
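“Check drift” can be as simple as keeping the first version of a summary and comparing it with what the tool shows a week later. A minimal Python sketch using the standard library’s `difflib.SequenceMatcher`: it scores word-level similarity between the two versions and lists qualifiers (such as “tentatively” or “pending”) that disappeared. The qualifier list and the sample summaries are hypothetical. A similarity score alone cannot tell you whether the meaning changed, which is why dropped qualifiers are reported separately.

```python
import difflib
import re

# Hypothetical starter list: words whose disappearance often signals lost nuance.
QUALIFIERS = {"if", "unless", "tentatively", "pending", "maybe", "likely", "only"}


def words(text: str) -> list[str]:
    """Lowercased word tokens; punctuation is dropped."""
    return re.findall(r"[a-z']+", text.lower())


def drift_report(original: str, later: str) -> dict:
    """Compare two versions of one summary and flag qualifiers that vanished."""
    before, after = words(original), words(later)
    similarity = difflib.SequenceMatcher(None, before, after).ratio()
    dropped = sorted((QUALIFIERS & set(before)) - set(after))
    return {"similarity": round(similarity, 2), "dropped_qualifiers": dropped}


week_one = "Team tentatively agreed to ship in May, pending legal review."
week_two = "Team agreed to ship in May."
print(drift_report(week_one, week_two))
# {'similarity': 0.75, 'dropped_qualifiers': ['pending', 'tentatively']}
```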

Why the model matters more than the UI

Most comparisons skip this point: the underlying language model shapes note quality more than the interface design does. Tools that emphasize conservative summarization (keeping qualifiers and hedging language) produce notes that age better. A polished UI can’t compensate for aggressive compression. Counterintuitive tip: choose the tool whose summaries feel slightly longer and less “confident.” That restraint usually preserves meaning.

Real-world scenario: the same meeting, three outcomes

We tested one 45-minute meeting transcript across three AI note tools.

| Outcome area | Tool A | Tool B | Tool C |
| --- | --- | --- | --- |
| Key decisions | Preserved | Partially lost | Preserved |
| Action items | Clear | Mixed | Clear |
| Tone & nuance | Intact | Flattened | Mostly intact |
| Edit persistence | Yes | No | Yes |

All three advertised the same features. Only two produced notes we’d trust to share.

[Expert Warning]

If an AI note tool won’t let you edit summaries and keep those edits, you’re accepting future errors by design.

[Pro-Tip]

Run a “same-input test.” Paste identical notes into competing tools and compare summaries side-by-side—differences appear fast.
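The side-by-side comparison can be made a little more systematic with a few lines of Python. This sketch assumes you paste each tool’s summary of the same source text into a dictionary by hand and list the single words (names, dates, qualifiers such as “unless”) that must survive; it reports which of those words each summary dropped and how long each summary is relative to the source. The tool names and text are invented examples, not results from the meeting test above.

```python
import re


def words(text: str) -> list[str]:
    """Lowercased word tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9']+", text.lower())


def same_input_test(source: str, summaries: dict[str, str], must_keep: list[str]) -> dict[str, dict]:
    """Score each tool's summary of one shared source text.

    must_keep holds single lowercase words (names, dates, qualifiers)
    that a faithful summary should still contain.
    """
    source_len = len(words(source))
    results = {}
    for tool, summary in summaries.items():
        tokens = words(summary)
        present = set(tokens)
        results[tool] = {
            "missing": [term for term in must_keep if term not in present],
            "length_ratio": round(len(tokens) / source_len, 2),  # share of source length
        }
    return results


source = ("Decision: launch the beta on June 3 unless QA finds blockers. "
          "Dana owns the rollback plan.")
summaries = {
    "Tool X": "Beta launches June 3 unless QA finds blockers; Dana owns rollback.",
    "Tool Y": "Beta launches in June.",
}
for tool, result in same_input_test(source, summaries, ["june", "unless", "dana", "rollback"]).items():
    print(tool, result)  # Tool Y drops "unless", "dana", and "rollback"
```

A summary that keeps every must-keep word can still distort meaning, so treat the output as a prompt for a closer read rather than a verdict.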

[Money-Saving Recommendation]

Avoid upgrading based on feature count. Pay only when retrieval reliability improves for your notes.

When narrowing choices, focus on AI note-taking software that lets you tune automation and protect context as your notes grow.

A practical comparison checklist you can reuse

Use this checklist before committing:

Import long and short notes.

Generate summaries; edit one and check persistence.

Search for a concept from last month.

Link two related notes; watch for duplication.

Export everything.

If a tool fails any step, write it down; it won’t improve on its own.
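The “Export everything” step is easy to check by eye for ten notes and tedious for five hundred. A minimal Python sketch, assuming (hypothetically) that your tool exports one Markdown file per note, named after the note title; many tools use other layouts, so adapt the glob pattern and matching rule. It lists expected notes that never made it into the export folder and exported files that came out empty.

```python
from pathlib import Path


def check_export(export_dir: str, expected_titles: list[str]) -> dict[str, list[str]]:
    """Report notes missing from an export folder, plus exported files that are empty.

    Hypothetical layout: one .md file per note, named after the note title.
    Adjust the glob pattern and name matching to whatever your tool produces.
    """
    folder = Path(export_dir)
    if not folder.is_dir():
        raise FileNotFoundError(f"export folder not found: {folder}")
    exported = {path.stem: path for path in folder.glob("*.md")}
    missing = [title for title in expected_titles if title not in exported]
    empty = sorted(
        stem for stem, path in exported.items()
        if not path.read_text(encoding="utf-8").strip()
    )
    return {"missing": missing, "empty": empty}


# Example (folder name and titles are placeholders):
# print(check_export("my-notes-export", ["Q3 pricing decision", "Vendor call"]))
```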

FAQs

Which AI note-taking software is most accurate?

Accuracy varies by content type; conservative summarizers age better.

Do all tools use the same AI?

No. Different models and tuning lead to different outcomes.

Is semantic search the same everywhere?

No. The balance between recency and conceptual similarity differs widely from tool to tool.

Should I prioritize UI or accuracy?

Accuracy and control first; UI second.

Can I switch tools later easily?

Only if exports are complete and readable, so test them early.

Related videos

Comparing note tools: https://www.youtube.com/watch?v=9q5m5Z1FZ8U

Building reliable note systems: https://www.youtube.com/watch?v=HcG7Jp8yqkY

Conclusion

A useful AI note-taking software comparison looks past features and into behavior. Choose tools that preserve meaning, allow control, and keep retrieval reliable as notes accumulate. When summaries remain trustworthy weeks later, the software earns its place in your workflow.
